04. Implementation

Implementation: TD(0)

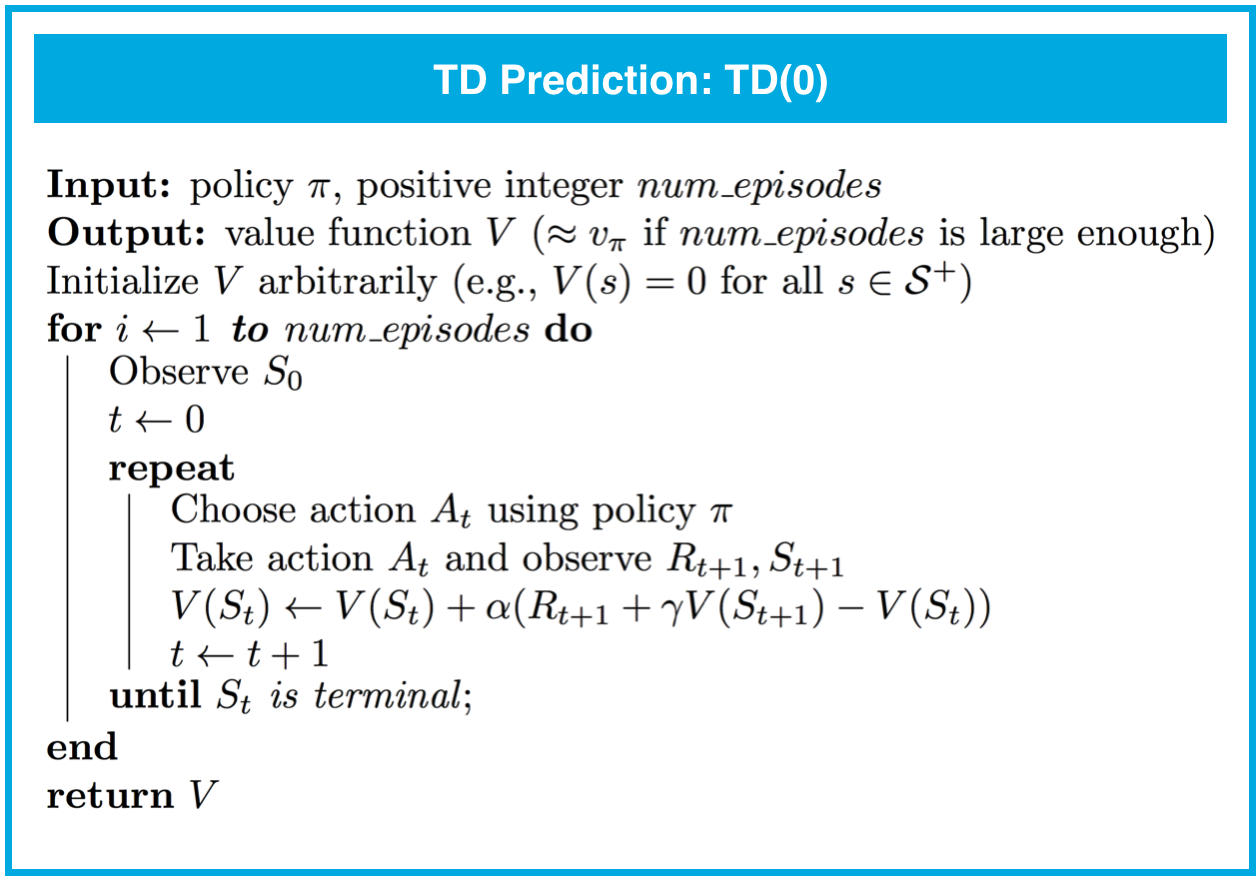

The pseudocode for TD(0) (or one-step TD) can be found below.
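Separately from the course pseudocode, here is a minimal Python sketch of the one-step TD update, V(S_t) ← V(S_t) + α [R_{t+1} + γ V(S_{t+1}) − V(S_t)]. It is an illustration under stated assumptions, not the notebook solution: the `reset`/`step` callables, the three-step corridor task, and the `always_right` policy are all hypothetical stand-ins chosen so the example runs without any external environment.

```python
from collections import defaultdict


def td0_prediction(reset, step, policy, num_episodes, alpha, gamma=1.0):
    """Estimate the state values of `policy` with one-step TD (TD(0)).

    `reset()` returns a start state; `step(state, action)` returns
    (next_state, reward, done). Both are hypothetical interfaces.
    """
    V = defaultdict(float)  # value estimates, initialised to 0
    for _ in range(num_episodes):
        state = reset()
        done = False
        while not done:
            action = policy(state)
            next_state, reward, done = step(state, action)
            # A terminal state has value 0, so it contributes nothing
            # to the target.
            target = reward + (0.0 if done else gamma * V[next_state])
            # Move the estimate a fraction alpha towards the TD target.
            V[state] += alpha * (target - V[state])
            state = next_state
    return V


# Hypothetical toy task: a corridor 0 -> 1 -> 2 -> 3 where state 3 is
# terminal and every step costs a reward of -1.
GOAL = 3


def corridor_reset():
    return 0


def corridor_step(state, action):
    next_state = state + 1  # the only available move is "right"
    return next_state, -1.0, next_state == GOAL


def always_right(state):
    return 1


V = td0_prediction(corridor_reset, corridor_step, always_right,
                   num_episodes=200, alpha=0.5)
```

Because this toy task is deterministic, the estimates settle on the negated number of steps remaining to the goal. Note that the update happens inside the `while` loop, after every time step, rather than once the episode has ended.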

For a fixed policy, TD(0) is guaranteed to converge to the true state-value function (in the mean, when the step size is constant), as long as the step-size parameter $\alpha$ is sufficiently small. Recall that this was also the case for constant-$\alpha$ MC prediction. However, TD(0) has some notable advantages:

- Whereas MC prediction must wait until the end of an episode to update the value function estimate, TD prediction methods update the value function after every time step. For the same reason, TD prediction methods work for both continuing and episodic tasks, while MC prediction can only be applied to episodic tasks.

- In practice, TD prediction has usually been found to converge faster than MC prediction. (That said, no one has yet been able to prove this mathematically, and it remains an open question.) You are encouraged to take the time to check this for yourself in your implementations! For an example of how to run this kind of analysis, check out Example 6.2 in the textbook.
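The empirical check suggested in the last bullet can be sketched as follows. This is a rough illustration, assuming the five-state random walk commonly used for this comparison (start in the middle state, step left or right with equal probability, reward +1 only when exiting on the right). The step sizes, episode count, number of runs, and seed are arbitrary choices of this sketch, and the MC variant shown is every-visit constant-α MC.

```python
import random

N_STATES = 5  # non-terminal states, indexed 1..5; 0 and 6 are terminal
TRUE_V = [k / 6 for k in range(1, N_STATES + 1)]  # exact values for this walk


def run_episode(rng):
    """Return the visited non-terminal states and the final reward."""
    state = 3
    states = []
    while 1 <= state <= N_STATES:
        states.append(state)
        state += rng.choice((-1, 1))
    reward = 1.0 if state == N_STATES + 1 else 0.0
    return states, reward


def rms_error(V):
    """Root-mean-square error of V over the non-terminal states."""
    squared = sum((V[s] - TRUE_V[s - 1]) ** 2 for s in range(1, N_STATES + 1))
    return (squared / N_STATES) ** 0.5


def td0_update(V, states, reward, alpha):
    # Replaying the trajectory in order reproduces the online TD(0)
    # updates here, because the random policy does not depend on V.
    for t, s in enumerate(states):
        is_last = t == len(states) - 1
        # Intermediate rewards are 0; the terminal state has value 0.
        target = reward if is_last else V[states[t + 1]]
        V[s] += alpha * (target - V[s])


def mc_update(V, states, reward, alpha):
    # With gamma = 1 and a single final reward, the return from every
    # visited state equals that reward (every-visit constant-alpha MC).
    for s in states:
        V[s] += alpha * (reward - V[s])


def average_rms(update, alpha, episodes=100, runs=50, seed=0):
    """Average the final RMS error over several independent runs."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(runs):
        V = [0.5] * (N_STATES + 2)  # slots 0 and 6 are never read
        for _ in range(episodes):
            states, reward = run_episode(rng)
            update(V, states, reward, alpha)
        total += rms_error(V)
    return total / runs


td_err = average_rms(td0_update, alpha=0.1)
mc_err = average_rms(mc_update, alpha=0.02)
print(f"TD(0) RMS error: {td_err:.3f}   MC RMS error: {mc_err:.3f}")
```

A single pair of step sizes is not a fair comparison on its own; sweeping several values of α for each method and plotting the error against the number of episodes gives a more informative picture.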

Please use the next concept to complete Part 0: Explore CliffWalkingEnv and Part 1: TD Prediction: State Values of Temporal_Difference.ipynb. Remember to save your work!

If you'd like to reference the pseudocode while working on the notebook, you are encouraged to open this sheet in a new window.

Feel free to check your solution by looking at the corresponding sections in Temporal_Difference_Solution.ipynb.